Tracking Chat-Based AI Tool Adoption, Uses, and Experiences

Leveraging the Neely-UAS Artificial Intelligence Index, we examine how widely adopted chat-based AI tools are, why people use them, and how users feel about them.

Artificial intelligence has been playing a growing part in everyday life for years, but its popularity has skyrocketed since the beginning of the COVID-19 pandemic. Part of this is due to improving technology that can now run computationally intensive math on ever-smaller devices and in more places. Another part, though, is the first public release of generative artificial intelligence tools that anyone with an internet connection can use. One such application, popularized in 2023, is the generative text chatbot, such as ChatGPT, Bard, and Claude. These chatbots are simple to use, create an alarmingly realistic sense of communicating with another person, and can help with all sorts of tasks, ranging from writing an email to writing thousands of lines of code that can power a website or statistical analyses (much like those in this blog post!). It therefore wasn’t surprising that, when OpenAI’s chatbot ChatGPT was released in November 2022, it rapidly became one of the fastest-growing internet apps of all time.

Given this rapid growth in the adoption of AI tools and an estimated generative AI market value of $1.3T by 2030, we examined the adoption of chat-based AI tools. This is the second wave of our survey on AI tool adoption, so we can see how their popularity has grown since our first survey in March 2023. In this new wave, we also increased our sample size from 1,965 to 9,936 US adults from USC’s Understanding America Study and added questions about how often and for what purposes people use these tools.

How popular are chat-based AI tools?

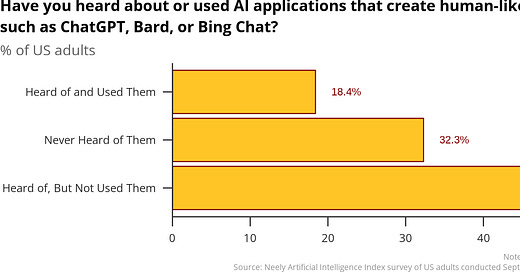

In May 2023, we found that just over 10% of US adults had used a generative text AI program. By October 2023, this number had jumped to 18% of US adults. In other words, usage of these chat-based AI tools grew by roughly 80% in just five months. This increase is consistent with findings from independent researchers and organizations, including Pew, which also found that 18% of US adults say they have used chat-based AI tools.
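The “80% increase” is a relative change, which is easy to confuse with the 8-percentage-point rise in the underlying share. The following minimal sketch computes both from the two reported figures; the function name `relative_increase` is our own, and treating “just over 10%” as exactly 10% is an assumption that makes 80% a slight upper bound.

```python
def relative_increase(before: float, after: float) -> float:
    """Return the relative change from `before` to `after` as a fraction."""
    return (after - before) / before

# Shares of US adults who had used a chat-based AI tool in each wave.
# Assumption: "just over 10%" is rounded down to 10%, so the relative
# increase computed here is an upper bound on the true figure.
may_share = 0.10
october_share = 0.18

change = relative_increase(may_share, october_share)
points = (october_share - may_share) * 100  # absolute change, in percentage points

print(f"Relative increase: {change:.0%}")
print(f"Absolute increase: {points:.0f} percentage points")
```

Any base slightly above 10% shrinks both the numerator and raises the denominator, so the true relative increase is a little under 80%.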

Of these users, 22% report using chat-based AI applications at least once a week, and another 28% report using them once or a few times per month. Together, these two groups make up half of all chat-based AI application users, meaning that half of users return to these applications regularly.
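Because the frequency figures are shares of users rather than of all adults, combining them with the 18% adoption figure expresses regular use as a share of the whole US adult population. This is a back-of-the-envelope sketch that assumes both estimates describe the same October 2023 population; the variable names are illustrative.

```python
# Reported shares among people who have used a chat-based AI tool.
weekly_or_more = 0.22
monthly = 0.28  # "once or a few times per month"

# Adding the two frequency groups gives the share of users who return regularly.
regular_users = weekly_or_more + monthly

# Assumption: the 18% adoption estimate and the frequency shares describe the
# same population, so multiplying them gives regular users among all US adults.
ever_used = 0.18
regular_share_of_adults = ever_used * regular_users

print(f"Regular users: {regular_users:.0%} of users, "
      f"{regular_share_of_adults:.0%} of US adults")
```

Under that assumption, roughly one in eleven US adults used these tools at least monthly.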

Why do people use chat-based AI tools?

As more than one hundred million people interact with these chatbots every month and share their experiences, it’s clear that the chatbots can be used in seemingly innumerable ways. Pew conducted a survey asking people whether they used ChatGPT for entertainment, learning, or help at work. Each of these uses was similarly common (16-20% of users) in its July 2023 survey. We asked a related question but changed it in two ways. First, we expanded the scope from ChatGPT alone to AI applications like it in general, such as Bard, Bing Chat, and Claude. Second, we provided a more extensive list of reasons why people might use these applications, to better understand their motivations and use cases.

The most commonly selected motivation was curiosity: 66% of US adults who had used one of these chatbots did so out of curiosity. Just over a third of users reported using these chatbots for work (36.8%) or for entertainment (35.4%), and just over a quarter reported using them to learn something new (27.3%) or to improve their communications (e.g., writing an email; 26%). The least commonly selected reasons were supplementing one’s income (3.3%), obtaining mental health assistance (4%), and seeking social connection (5%).
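Adding up the reported shares shows how to read them: each is the percentage of users who selected that reason, not a slice of a single pie. This sketch uses only the figures given in the paragraph above; the survey may have offered other options that are not listed here, so the true total could be even higher.

```python
# Reported share of users selecting each reason, in percent.
# Only the reasons mentioned in the text are included.
reasons = {
    "curiosity": 66.0,
    "work": 36.8,
    "entertainment": 35.4,
    "learn something new": 27.3,
    "improve communications": 26.0,
    "social connection": 5.0,
    "mental health assistance": 4.0,
    "supplement income": 3.3,
}

total = sum(reasons.values())
print(f"Sum of reported shares: {total:.1f}%")

# A total above 100% means respondents could select more than one reason.
if total > 100:
    print("Shares overlap: reasons were not mutually exclusive.")

# Rank the listed reasons from most to least commonly selected.
for name, share in sorted(reasons.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:>25}: {share:5.1f}%")
```

The listed reasons alone sum to about 204%, so the average user selected roughly two reasons.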

How did these users feel about their chat-based AI tool experiences?

In addition to asking people about the frequency of and motivations for using chat-based AI tools, we also asked them four questions about their attitudes towards these tools:

How useful do you find AI applications such as ChatGPT, Bard, or Bing Chat?

In a sentence or two, please describe why your experience with AI applications such as ChatGPT, Bard, or Bing Chat could or could not be useful.

How harmful do you find AI applications such as ChatGPT, Bard, or Bing Chat?

In a sentence or two, please describe why your experience with AI applications such as ChatGPT, Bard, or Bing Chat could or could not be harmful.

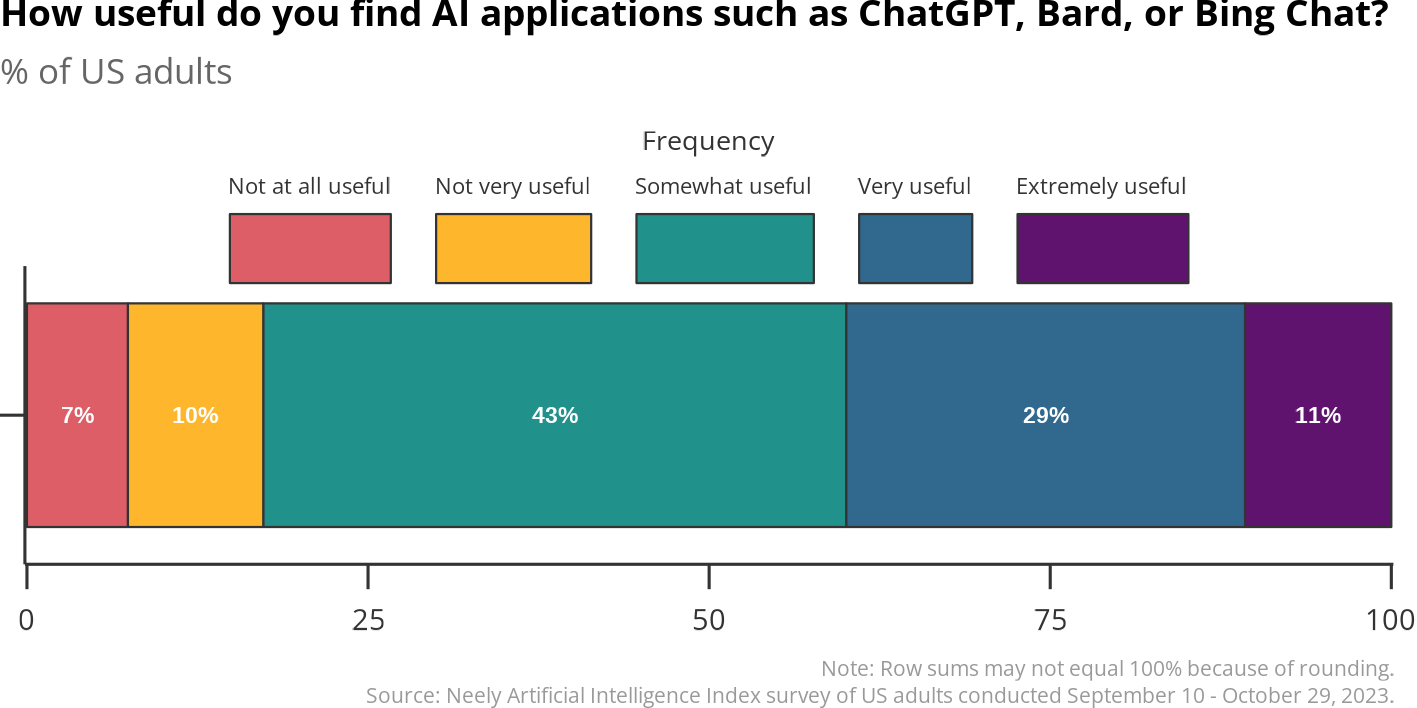

A large majority of users (83%) found these applications to be at least somewhat useful, and just 7% said they were not at all useful. Additionally, 40% of users found these applications very or extremely useful, compared with 17% who found them not very or not at all useful. Taken together, the data clearly show that people find these applications useful.

In the open-ended text responses, most respondents also said that these types of AI applications are useful. The most common reasons people gave for why these applications were useful fell into three main categories:

Getting help with tasks like writing emails and speeches for social events or creating lesson plans,

Saving time when seeking customer service and when summarizing longer texts and papers, and

Getting help with technical projects, like creating website templates or writing code.

On the other hand, the most common reasons these AI applications were not useful fell into three main categories:

Not trusting the accuracy of the information produced,

Failing to produce the desired output, and

Producing output that seemed too obviously generated by an AI bot.

All in all, though, far more respondents gave reasons why their experience with chat-based AI applications was useful than reasons why it was not.

Whether people find these applications harmful is less clear. Just over half of users (51%) said these applications are at least somewhat harmful, while 49% said they are not very or not at all harmful. Only 11% felt that these applications were very or extremely harmful.
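Both attitude questions are reported as collapsed categories, so the published figures also imply shares the text does not state directly. This sketch assumes a five-point response scale (not at all, not very, somewhat, very, extremely), which the category labels suggest but the post does not spell out; the `CollapsedScale` class is a hypothetical helper, not part of the survey’s tooling.

```python
from dataclasses import dataclass


@dataclass
class CollapsedScale:
    """Reported shares (percent) for one five-point attitude question."""
    at_least_somewhat: float       # somewhat + very + extremely
    very_or_extremely: float       # very + extremely
    not_very_or_not_at_all: float  # not very + not at all

    def somewhat_only(self) -> float:
        # Remove the top two categories from the "at least somewhat" total.
        return self.at_least_somewhat - self.very_or_extremely

    def is_consistent(self, tolerance: float = 1.0) -> bool:
        # The two halves of the scale should cover every respondent,
        # allowing for rounding in the published figures.
        total = self.at_least_somewhat + self.not_very_or_not_at_all
        return abs(total - 100) <= tolerance


useful = CollapsedScale(at_least_somewhat=83, very_or_extremely=40, not_very_or_not_at_all=17)
harmful = CollapsedScale(at_least_somewhat=51, very_or_extremely=11, not_very_or_not_at_all=49)

for label, scale in [("useful", useful), ("harmful", harmful)]:
    print(f"{label}: somewhat only = {scale.somewhat_only():.0f}%, "
          f"consistent = {scale.is_consistent()}")

# The usefulness question also reports "not at all" (7%) on its own,
# so "not very useful" is the remainder of the bottom two categories.
not_very_useful = useful.not_very_or_not_at_all - 7
print(f"useful: not very = {not_very_useful:.0f}%")
```

Under this reading, 43% of users found the applications somewhat (but not very) useful and 10% found them not very useful, while 40% found them somewhat (but not very) harmful.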

In the open-ended text responses, however, most respondents said that these types of AI applications are not harmful. Most stated that these applications would help them save time and improve the quality of their own manual work, often for the same reasons they gave for finding the applications useful. The harms that respondents did mention fell into three main categories:

Concern that these tools might “replace humans at work” and allow companies to conduct more layoffs.

Concern that students could use them to cheat on school assignments.

Concern that the information these applications provide is not always accurate and can be dangerous if people do not fact-check it before disseminating it.

Who uses chat-based AI tools?

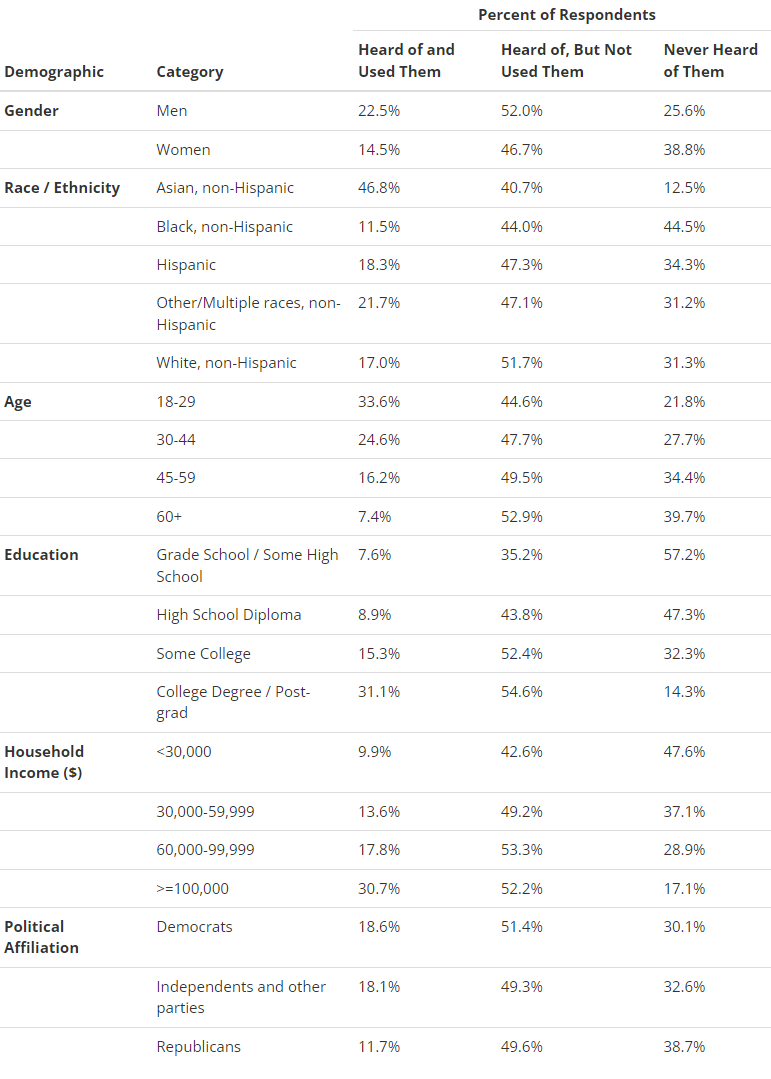

Early adopters of new technology tend to be younger and are slightly more likely to be men. In the accompanying table, we report the percentage of people in each demographic and social category who have used, have heard of but not used, or have never heard of chat-based AI tools. Consistent with past research on early adopters of AI technology, early users of these chatbots are more likely to be men, non-Hispanic Asian, younger, more educated, and wealthier. Additionally, Republicans are 35-37% less likely than Democrats, Independents, and members of other parties to have heard of and used chat-based AI tools.
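The post does not say whether “35-37% less likely” is a relative difference or a gap in percentage points, and the two can differ a great deal. Assuming it is relative, with p_R the share of Republicans in a given category (for example, having used the tools) and p_O the corresponding share among Democrats, Independents, and members of other parties, the two measures are:

```latex
\text{relative difference} = \frac{p_{O} - p_{R}}{p_{O}}
\qquad \text{vs.} \qquad
\text{percentage-point gap} = 100\,(p_{O} - p_{R})
```

Under the relative reading, a 35% gap means p_R = 0.65 × p_O. If, purely hypothetically, p_O were 0.20, then p_R would be 0.13: a gap of 7 percentage points, not 35.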

In Summary

Just 18% of US adults have used chat-based AI applications, but adoption is growing rapidly. Curiosity is the dominant reason people give for using these chatbots, though that could reasonably be expected to shift toward other reasons as users learn about the wide range of use cases. The vast majority of users say these applications are useful, which suggests that they are likely to retain current users and continue to attract new ones.

Despite the excitement surrounding these applications, about half of users believe that they may be harmful. These concerns are reasonable: these chatbots are built on large machine learning models that are trained on massive amounts of text data and learn to generate text similar to what they were trained on. As a result, these models tend to reflect the social norms embedded in that text, and those norms are sometimes discriminatory, prejudicial, and upsetting. One approach to this problem that gained steam in 2023 is constitutional AI, a method for training large language models that starts by defining a set of desired normative principles (a “constitution”), uses those principles to evaluate the model’s responses, and then trains the model to produce responses closer to them. Anthropic trained its chatbot, Claude, this way to mitigate some of the harmful behaviors observed in other chatbots, like ChatGPT.

Artificial intelligence received a great deal of attention in 2023 as its tools were released to the broader public. Given an estimated market value that may exceed $1.3T in the next seven years and rapidly improving technology, it will likely continue to attract massive investment and play an increasing role in daily life. We will continue to monitor adoption, usage, and sentiment over time.