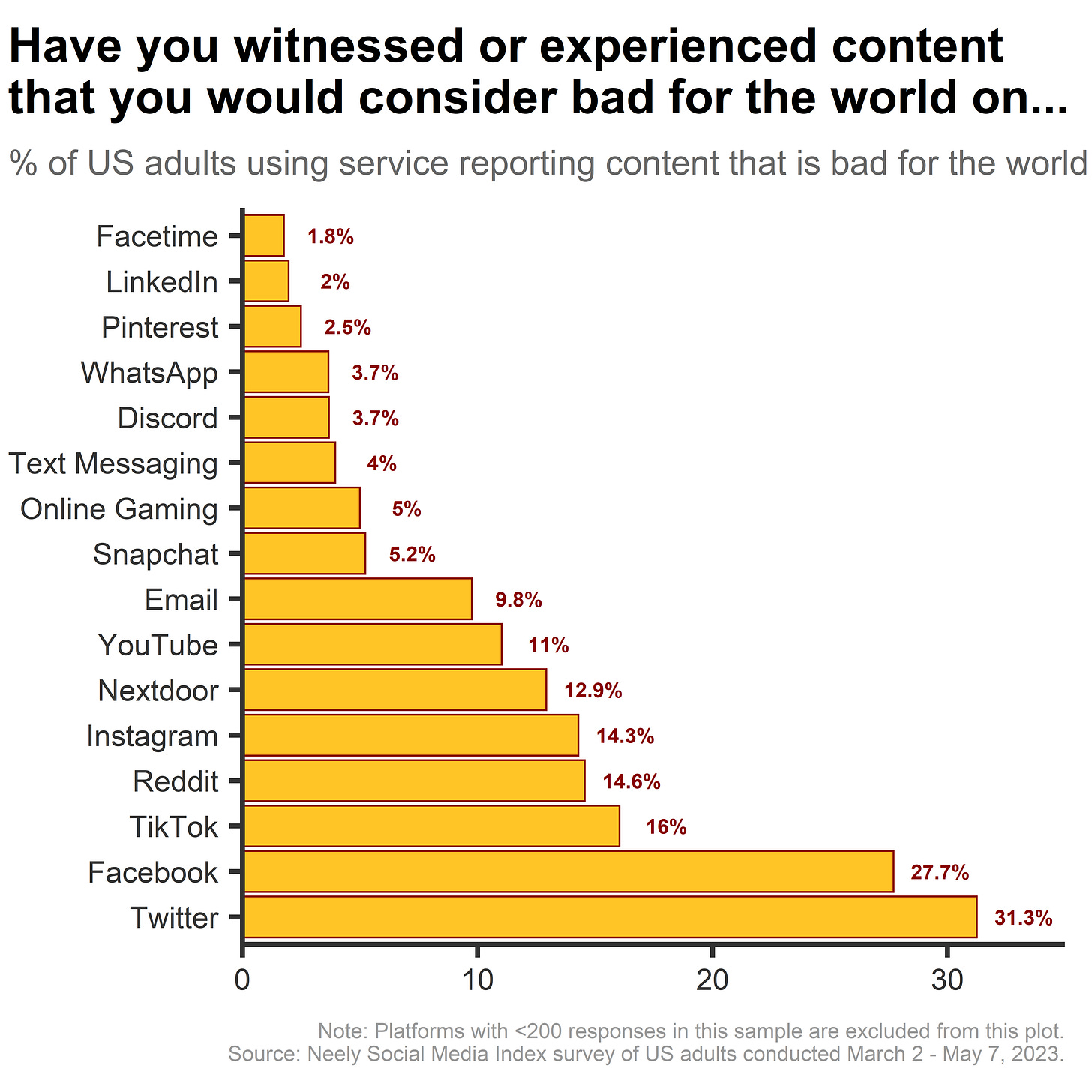

Users Report Seeing More Content that is Bad for the World on Twitter and Facebook than on other Social Media Platforms

Two weeks ago we unveiled the Neely Social Media Index and reported the top-level results for US adults’ experiences with social media and communication services. In the next few posts, we go a click deeper into each of those initial findings. Today, we explore user experiences with content that they would consider bad for the world.

A growing majority of adults in many countries use at least one form of social media, and most people use it every day. Some estimates suggest that adults may spend 20 or more hours every month on social media. With this kind of usage, it is not surprising that it has far-reaching effects on society. Some of the effects, undoubtedly, are quite positive. Yet, there is no shortage of abuses of these platforms that have played a role in some tragic societal events ranging from Russia’s interference in the 2016 US election to facilitating a military’s genocide against the Muslim Rohingya people of Myanmar.

Today’s post focuses on the potentially harmful content that users perceive as bad for the world. In our nationally representative UAS panel survey of US adults, we found that Twitter and Facebook were the platforms with the highest percentage of users reporting seeing content that they deemed bad for the world. The rates at which users of Twitter and Facebook reported seeing such content (31.3% and 27.7%, respectively) were roughly 3.2 and 2.8 times the rate at which people reported it in their use of email (9.8%). The gap is even more extreme when comparing these platforms to their job-finding social media counterpart, LinkedIn. Specifically, Twitter users were 15.65 times and Facebook users were 13.85 times as likely as LinkedIn users to report experiences with content that they deemed bad for the world (31.3% and 27.7%, respectively, vs. 2%).
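For readers who want to check the arithmetic, the ratios quoted above can be reproduced from the published percentages. This is a minimal sketch: the `rate_ratio` helper and the dictionary names are ours, introduced only for illustration, and because the inputs are already rounded to one decimal place, the results may differ slightly from ratios computed on the raw survey data.

```python
# Percentages of users who reported seeing content they deemed bad for the
# world, as quoted in the text (already rounded to one decimal place).
reported = {"Twitter": 31.3, "Facebook": 27.7}
baselines = {"email": 9.8, "LinkedIn": 2.0}


def rate_ratio(platform_pct: float, baseline_pct: float) -> float:
    """Return how many times the baseline rate the platform rate is."""
    return platform_pct / baseline_pct


for baseline, base_pct in baselines.items():
    for platform, pct in reported.items():
        ratio = rate_ratio(pct, base_pct)
        print(f"{platform} vs. {baseline}: {ratio:.2f}x")
```

Note that these are ratios of reported rates ("times as likely"), not percentage-point differences.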

This analysis is informative in giving a general rank ordering of the social media and communication services where users report having the most experiences with content that they perceive as bad for the world. Yet the underlying question is broad and doesn’t tell us much about the actual content or how that content is bad for the world (in each platform user’s eyes). Therefore, we asked respondents three follow-up questions and report on those data next:

1. Did your experience on [insert platform name here] relate to any of these topics? Check the box next to all that apply.
   - Medical/health information
   - Politics
   - Crime
   - Local news
   - Personal finance
   - None of the above

2. What negative impact do you feel your experience(s) with [insert platform name here] could have on the world? Check the box next to all that apply.
   - It could increase hate, fear, and/or anger between groups of people
   - It could increase polarization
   - It could increase the risk of violence
   - It could misinform or mislead people
   - It likely would not have much of an effect
   - Other

3. In a sentence or two, please describe one experience on [insert platform name here] with content that you would consider bad for the world.

What topics best describe the content that users perceive as bad for the world across social media platforms?

To write the first follow-up question, we identified topics that prior research has found tend to be seen as bad for the world. But even within these topics, we expected to see variance in how often people identified them as being bad for the world. For example, content that pertains to politics or global health pandemics may have a higher risk of being “bad for the world” than content pertaining to personal finances. Indeed, collapsing across platforms, we see that content flagged as bad for the world is nearly twice as likely to be about politics (65.06%) as it is to be about the runner-up topic, crime (35.14%). Personal finance (14.34%) is the topic we asked about that was least likely to be selected as describing content that users perceived as bad for the world.

Given that these platforms were designed to serve different purposes and audiences, it is reasonable to assume that the content users view on them also addresses different topics. For example, NextDoor is a neighborhood-focused platform where local communities might be more inclined to share crime-related information and discuss local news. In contrast, Twitter’s owner, Elon Musk, describes that platform as “a common digital town square, where a wide range of beliefs can be debated…”

In the heatmap below, we see the percentage of users of each platform who reported an experience with content that they perceived as bad for the world and stated that it related to each of the five topics. Again, content that users perceived as bad for the world was more likely to be political than to concern any of the other topics we asked about in our survey. Yet, there is considerable variability across the platforms in the proportion of users reporting such experiences.

Twitter and Facebook users were at least twice as likely as users of other platforms to report having an experience with content they deemed bad for the world and to say that the content pertained to politics. While Twitter and Facebook generally had the highest rates of bad-for-the-world experiences relative to other platforms, regardless of topic, there is also informative variability within platforms. For example, the content on NextDoor that users were most likely to perceive as bad for the world was related to crime.

This analysis is informative in that it shows us that users perceive more potential harm stemming from some topics than from others. This may have contributed to efforts at some of these companies to simply reduce the amount of the riskiest content, as Facebook did when it announced that it wanted to reduce political content in News Feed. Yet, we can still go deeper as we try to understand what impact users fear this content could have.

What do users think the impact of content that is bad for the world on these platforms is?

The majority of US adult users of social media thought that content that was bad for the world would:

Misinform or mislead people (69.14%),

Increase hate, fear, and/or anger between groups of people (61.01%), and

Increase political polarization (52.81%)

These findings are consistent with quotes taken from internal research documents at Meta that were leaked to the Wall Street Journal. Specifically, “the primary bad experience was corrosiveness/divisiveness and misinformation in civic content,” and not simply with political content, in general.

In the heatmap below, we can see these potential impacts across each of the platforms. Again, Twitter and Facebook users were the most likely to say that the content they experienced on those platforms could misinform or mislead people, increase hate, fear, and/or anger between groups of people, and increase polarization. The rates for these two platforms are significantly higher than for all other popular platforms.

In Summary

These data highlight considerable variability in users' experiences with content that they perceive to be bad for the world across different social media platforms. FaceTime, LinkedIn, Pinterest, WhatsApp, Discord, and Snapchat users report far fewer experiences with such content on those platforms than Twitter and Facebook users do on theirs. This may help explain why the majority of Twitter users report having taken a break from the platform in the past year and why, in 2022, the number of daily active users on Facebook stopped growing for the first time in the company’s history. Given these headwinds and external pressures, many of these companies have conducted mass layoffs, which some reporting suggests disproportionately targeted the experts working to protect users from content that poses societal risk.

Yet, if these layoffs have disproportionately affected the staff with expertise in promoting safety and integrity, user experiences with content they perceive as bad for the world may grow. If those negative experiences continue growing, we may see an increased exodus of users from the social media giants to new platforms that emphasize their commitment to creating a safe and trustworthy community where people can connect with each other (T2, New Public). In subsequent months, as more waves of our longitudinal survey data come in, we will be able to see whether these experiences change over time.

One recommendation that may stem the tide for these social media giants is to do more to combat the subset of content that users feel is bad for the world, particularly the content that is likely to misinform and divide people. This could be accomplished by developing machine learning models and leveraging artificial intelligence tools to predict what content is most likely to be bad for the world, like toxic and untruthful content, and then decreasing the distribution of that type of content. Some of these companies, like Facebook, have already built these models and found that demoting content based on those models reduced users’ exposure to harmful content; however, Facebook opted not to launch that change into their ranking algorithm because it lowered the number of times Facebook users were opening the app in the short time frame where they were evaluating the proposal.
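To make the idea of demotion concrete, here is a minimal sketch of how a predicted harm score could lower a post's position in a ranked feed. Everything in it is hypothetical and for illustration only: the `Post` fields, the `demoted_score` formula, and the `strength` parameter are our assumptions, not a description of Facebook's or any other company's actual models or ranking system.

```python
from dataclasses import dataclass


@dataclass
class Post:
    post_id: str
    engagement_score: float  # hypothetical baseline ranking score
    harm_probability: float  # hypothetical classifier output in [0, 1]


def demoted_score(post: Post, strength: float = 0.8) -> float:
    """Scale the baseline score down in proportion to predicted harm.

    strength=0 leaves the ranking untouched; strength=1 drives the score
    of a post predicted to be certainly harmful all the way to zero.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    return post.engagement_score * (1.0 - strength * post.harm_probability)


def rank_feed(posts: list[Post], strength: float = 0.8) -> list[Post]:
    """Order posts from highest to lowest demoted score."""
    return sorted(posts, key=lambda p: demoted_score(p, strength), reverse=True)


feed = [
    Post("a", engagement_score=10.0, harm_probability=0.9),
    Post("b", engagement_score=8.0, harm_probability=0.1),
    Post("c", engagement_score=6.0, harm_probability=0.0),
]

print([p.post_id for p in rank_feed(feed)])                # demotion applied
print([p.post_id for p in rank_feed(feed, strength=0.0)])  # engagement only
```

In this toy feed, post "a" ranks first on engagement alone but drops to last once its high predicted harm is taken into account. That is the trade-off described above: demotion reduces exposure to the content most likely to be bad for the world, at a possible cost to short-term engagement.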

In conclusion, users report seeing more content that they believe is bad for the world on Twitter and Facebook than on all other social media platforms. Much of the content that users perceive as bad for the world pertains to politics, and most users with these experiences believe that this content could divide and polarize society, fuel hate between groups of people, and misinform people.