What on social media is bad for the world?

Reports of seeing content that is bad for the world are down on Facebook, and reports of content that could increase the risk of violence are up on X (Twitter).

Last year, we found that US adults who used X (Twitter) and Facebook were 2-3x more likely to see content on those platforms that they considered bad for the world. Since then, real-world crises like the Russian invasion of Ukraine have persisted, and new tragedies have emerged, including the Hamas attack on Israel and the humanitarian crisis that followed Israel’s response. Social platforms, like traditional news media, can serve as a digital battleground where involved parties spread disinformation and propaganda. Moreover, these platforms shape what information gets distributed to potentially billions of people, and they tend to further distribute the content that gets the most clicks, likes, comments, and reshares. One problem with these engagement-based recommendation systems is that disinformation, misinformation, and toxic content tend to receive more engagement than their more factual and civil counterparts. As a result, these ranking systems lead more people to encounter the kind of harmful content that most users tend to describe as “bad for the world.”

In today’s post, I examine whether US adults’ experiences with content that they consider bad for the world have changed over the past year, and whether that varies by social media or communication platform. I’ll also examine how people describe these experiences and what impacts people believe these experiences have on the world.

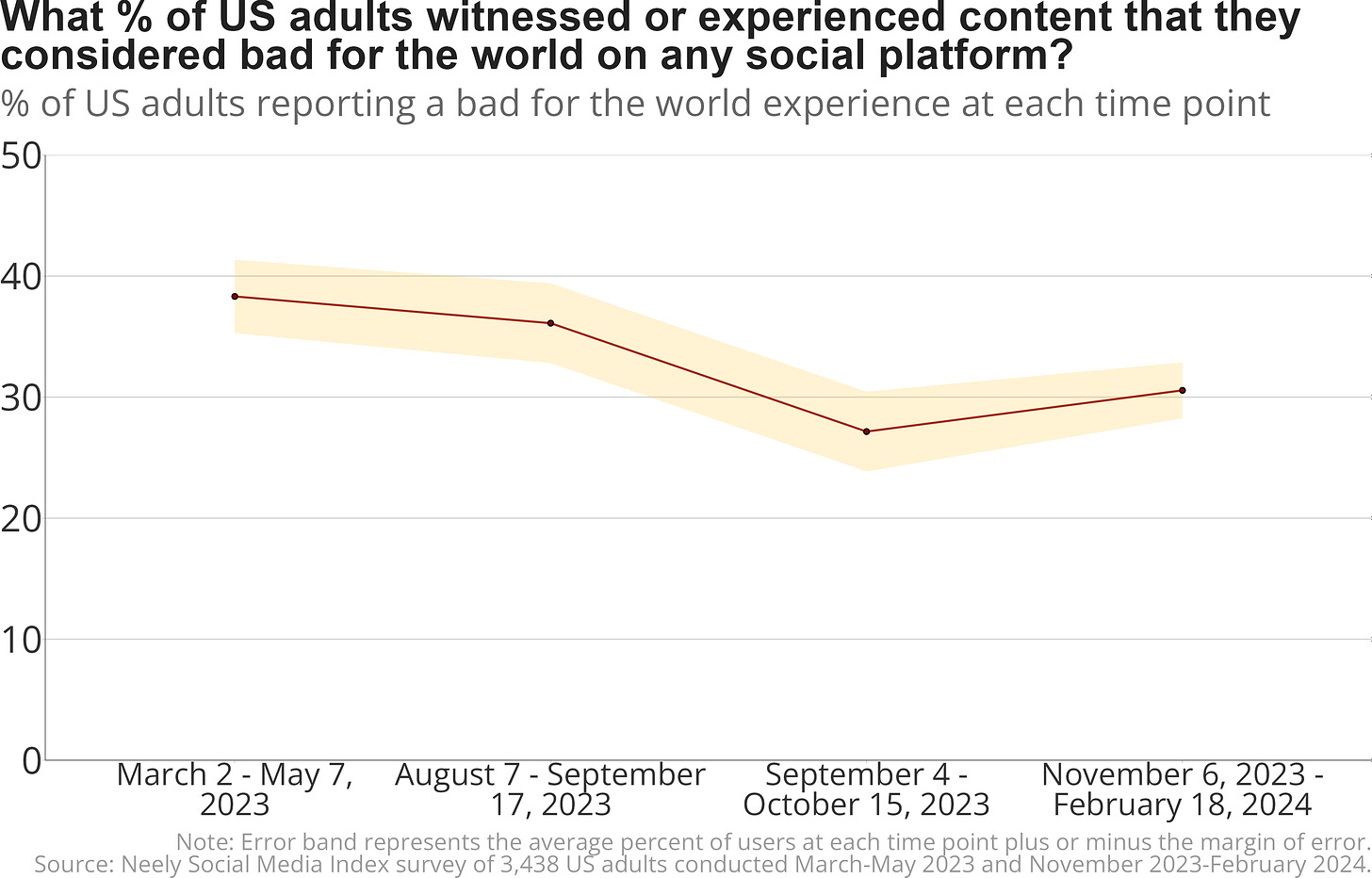

In the graph below, I plot the percentage of US adults who reported encountering content that they considered bad for the world on any of the social platforms that they used that were included in our survey (full list, plus Threads, may be found here). These societally-oriented negative experiences decreased between March and October of 2023, before increasing in our latest wave that concluded in February 2024. The current full-sample percentage of people reporting these negative experiences on any platform is still significantly lower than at the year-ago time point, but is significantly higher than in the previous survey wave. In the most recent survey wave, 1 in 3 US adults reported encountering content that they considered bad for the world.
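For readers who want a feel for what "significantly" higher or lower can mean when comparing two survey waves, here is a minimal sketch of a two-proportion z-test. The post does not state which test was actually used, nor the per-wave sample sizes, so the function name and the counts below are placeholders for illustration only. The sketch also treats the two waves as independent samples and ignores survey weights, which is a simplification for a panel survey.

```python
import math


def two_proportion_z_test(hits_a, n_a, hits_b, n_b):
    """Two-sided z-test for a difference between two independent proportions.

    hits_* = number of respondents reporting the experience,
    n_*    = number of respondents in that wave.
    """
    p_a = hits_a / n_a
    p_b = hits_b / n_b
    # Pooled proportion under the null hypothesis of no difference.
    pooled = (hits_a + hits_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Placeholder counts, NOT the survey's data: 400 of 1000 vs. 330 of 1000.
z, p_value = two_proportion_z_test(400, 1000, 330, 1000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

With these placeholder counts the difference would clear the conventional 5% threshold; real survey analyses typically adjust for weighting and repeated respondents as well.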

The overall decline in these experiences with content that users consider bad for the world could mean that these experiences are [1]:

- declining on all platforms equally,

- declining on some, but not all platforms, or

- declining on some platforms while increasing on other platforms.

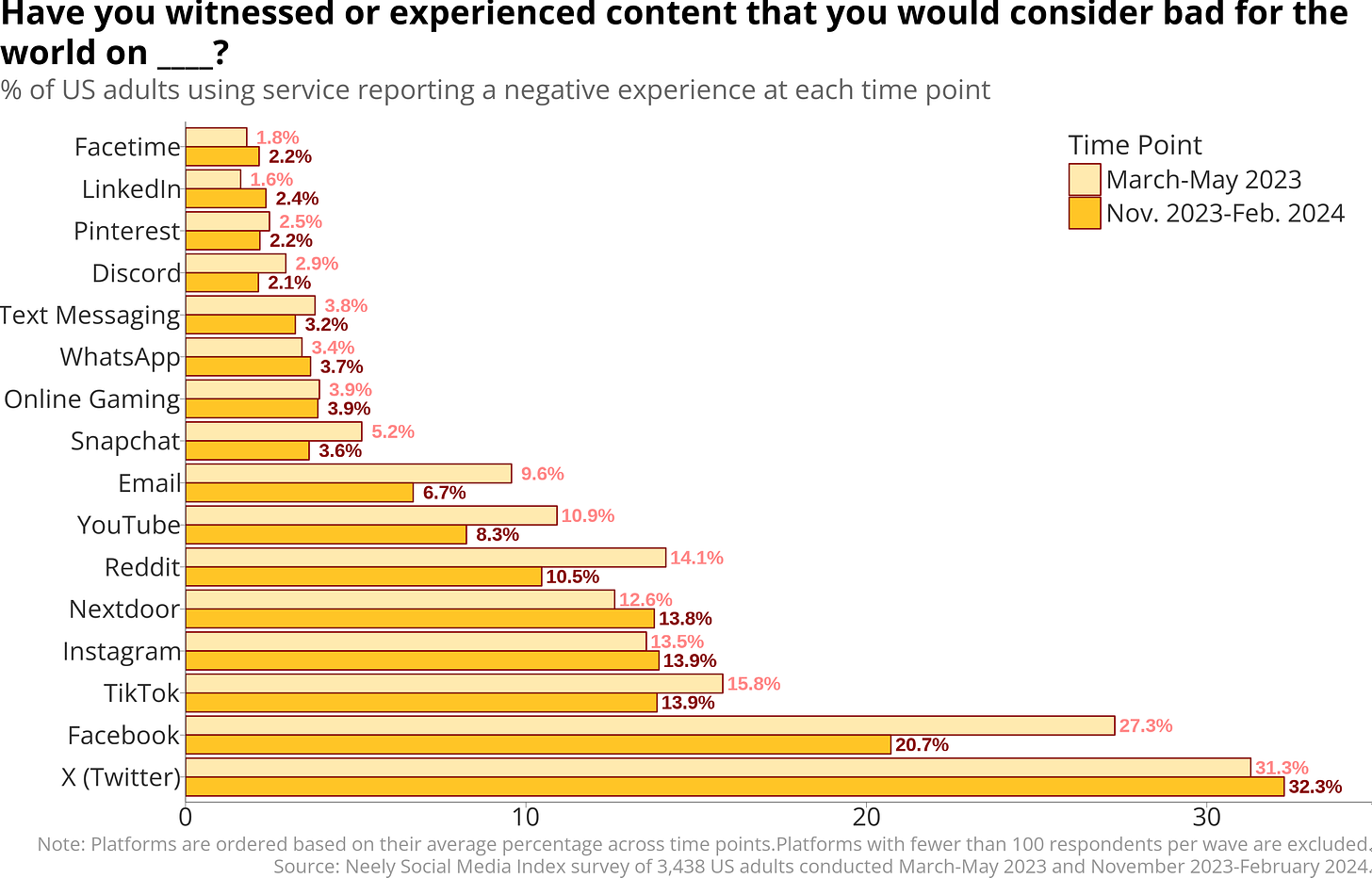

In the graph below, I display the percentage of users of each platform who reported an experience they considered bad for the world in the year-ago wave and in the latest wave. Most platforms showed a slight decrease in these negative experiences, but the only platforms or services showing a significant decrease are Email and Facebook. Despite Facebook’s significant decrease, the platform still has significantly more users reporting content that is bad for the world than any other platform besides X (Twitter). X (Twitter) not only maintained its position as the platform with the highest rate of users reporting experiences that are bad for the world, it also slightly increased that rate. FaceTime, LinkedIn, Pinterest, Discord, Text Messaging, WhatsApp, Online Gaming, and Snapchat were all tied, within the margin of error, for the lowest rate of experiences users considered bad for the world of all platforms surveyed.

The above analysis is instructive in showing the general trend of bad-for-the-world experiences decreasing over the year, overall and on some platforms, but it does not tell us what kinds of content users tend to consider bad for the world, or what they believe the effects of this content are on the world. Next, I examine two follow-up questions we asked of our survey panelists who reported having one of these experiences on these platforms.

What topics best describe the content that users perceive as bad for the world across social media platforms?

Based on prior research, we asked US adults whether their experience pertained to one or more of 5 topics often related to content that is viewed as bad for the world. When users reported encountering content that they considered bad for the world, they were most likely to say that the content was related to Local News (22.7%) and/or Politics (21%). Next most commonly, users stated the content was related to Medical/Health Information (17.7%) and/or Crime (16.5%). Less than 5% of US adults who had one of these experiences indicated that the content was related to Personal Finance (2.6%) or Something Else (3%). Notably, Politics, Medical/Health Information, and Local News were selected less often in November 2023-February 2024 than in March-May 2023.

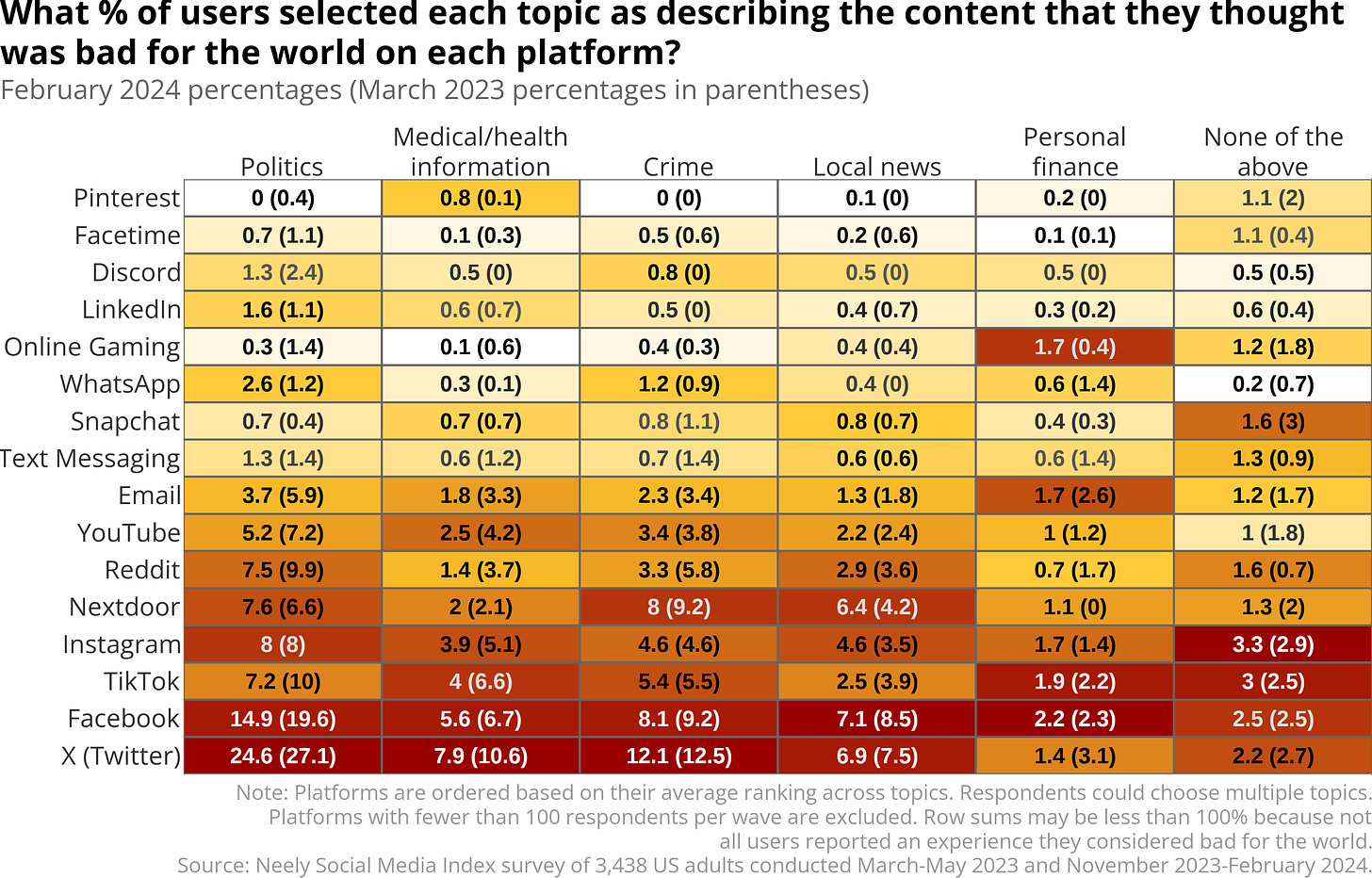

In the heatmap below, I’ve summarized those same percentages, but broken down separately for each of the platforms included in our survey. Cells are shaded based on each platform’s rank within each topic. For example, X (Twitter) has the highest rates of users selecting Politics, Medical/Health Information, and Crime as topics characterizing their experiences that they consider bad for the world, so those tiles are printed in the darkest red. By contrast, Pinterest has the lowest rates of users selecting Politics, Crime, and Local News, so its tiles have a white background.
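To make the rank-based shading concrete, here is a minimal sketch of how a shade could be assigned to each platform within a single topic. The function name, the platform labels, and the rates are all hypothetical placeholders, not the survey's values, and the tie-breaking rule is an arbitrary choice made for this illustration rather than the method used to draw the heatmap.

```python
def rank_shades(rates):
    """Map each platform to a shade in [0, 1] by its rank within one topic.

    1.0 means the highest rate (darkest tile), 0.0 the lowest (white tile).
    Platforms with equal rates keep their input order, which is an arbitrary
    tie-breaking choice for this sketch.
    """
    ordered = sorted(rates, key=rates.get)  # lowest rate first
    steps = max(len(ordered) - 1, 1)        # avoid dividing by zero
    return {platform: i / steps for i, platform in enumerate(ordered)}


# Placeholder rates for one topic, NOT the survey's numbers.
example_topic = {"Platform A": 0.25, "Platform B": 0.10, "Platform C": 0.02}
shades = rank_shades(example_topic)
for platform, shade in shades.items():
    print(f"{platform}: shade {shade:.2f}")
```

Shading by rank rather than by raw percentage makes the ordering of platforms easy to see within a topic, at the cost of hiding how large the gaps between platforms are, which is why the printed numbers in each tile still matter.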

Overall, the traditional social media platforms, X (Twitter), Facebook, TikTok, and Instagram, tend to have the highest rates of these bad-for-the-world experiences, mostly regardless of topic. About 1 in 4 X (Twitter) users report encountering political content that is bad for the world. The neighborhood-focused social media platform Nextdoor has a higher rate of users flagging content as being Crime- and Local News-related than most other platforms (except X and Facebook). By comparing the numbers printed in each heatmap tile to the adjacent number in parentheses, we can see that Facebook, TikTok, and Email all showed decreases in the percentages of users saying their experience was related to Politics.

This analysis is instructive in that it shows that US adults are more likely to say that content they consider bad for the world is related to Politics than to any other topic. This may be part of the reason that some companies, like Meta, have publicly announced that they are actively trying to demote political and news content. Yet, we can still go deeper to understand what impact people believe this content is likely to have on the world.

What do users think the impact of content that is bad for the world on these platforms is?

Collapsing across all platforms, we see that more than 1 in 5 US adults believed that content they saw on any of the 20 platforms included in our survey would:

- Misinform or mislead people (22.7%)

- Increase hate, fear, and/or anger between groups of people (21%)

And roughly 1 in 6 US adults believed that content they saw on these social platforms would:

- Increase political polarization (17.7%)

- Increase the risk of violence (16.5%)
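The "1 in N" framing above is simple arithmetic: a share of p percent corresponds to about 1 in 100/p people. The short sketch below, using only the four percentages reported in the list (the helper name `one_in_n` is my own), shows where each figure lands relative to the 1-in-5 and 1-in-6 marks.

```python
# Percentages as reported in the list above.
impacts = {
    "Misinform or mislead people": 22.7,
    "Increase hate, fear, and/or anger between groups of people": 21.0,
    "Increase political polarization": 17.7,
    "Increase the risk of violence": 16.5,
}


def one_in_n(percent):
    """Convert a percentage into the N of a '1 in N' statement."""
    return 100.0 / percent


for label, pct in impacts.items():
    print(f"{pct:>5.1f}% is about 1 in {one_in_n(pct):.1f}: {label}")
```

The first two impacts clear the 1-in-5 mark (20%), and 17.7% clears the 1-in-6 mark (about 16.7%), while 16.5% sits just under it.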

The rate of people noting the risk of violence as an impact of content they saw online held steady over the year, but all other impacts were selected less often than in the year-ago period.

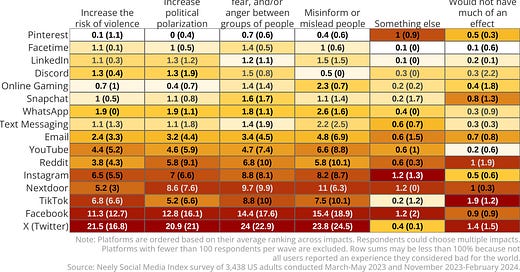

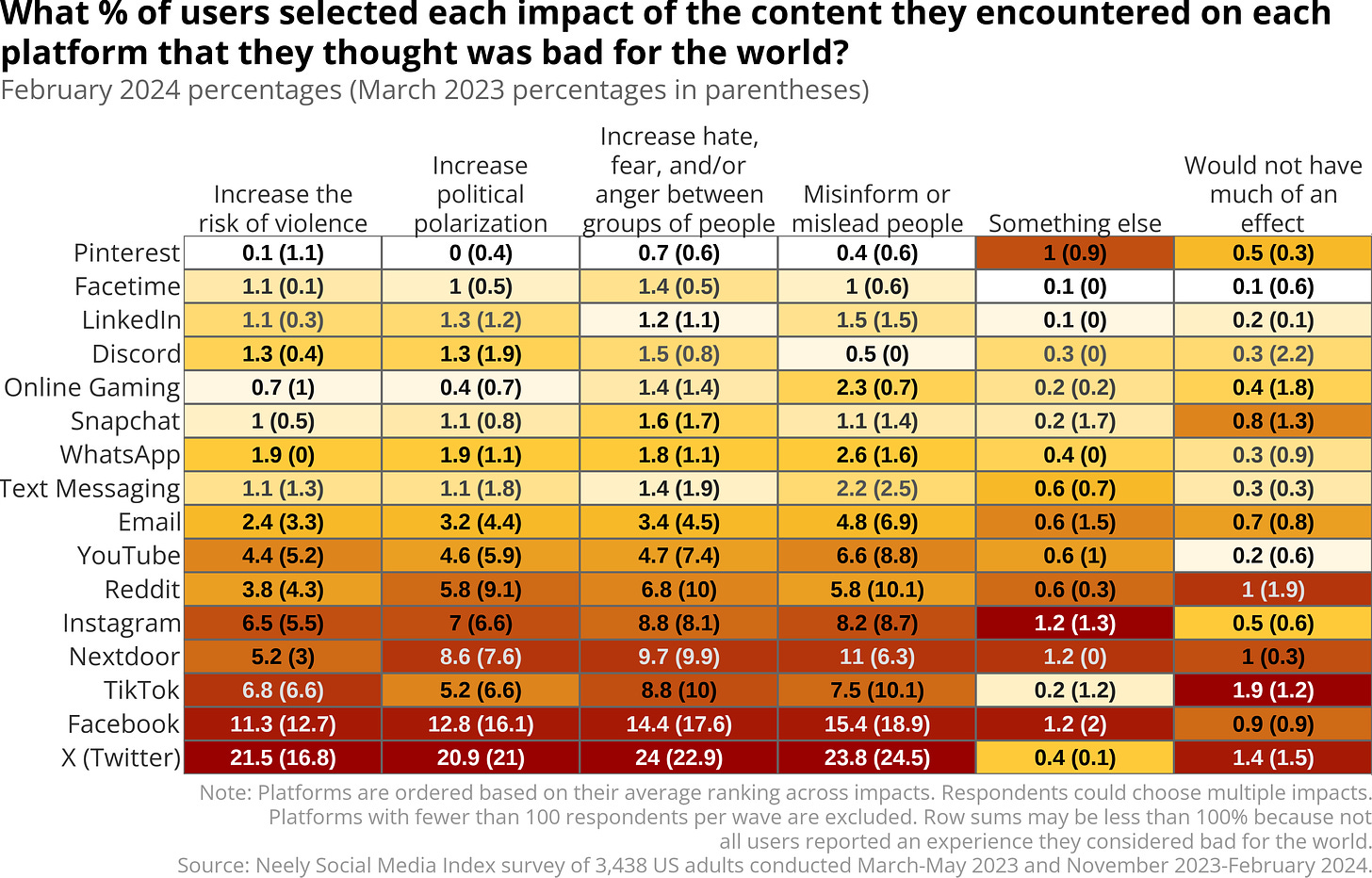

When examining these impacts by platform, we see in the heatmap below that X (Twitter) has higher rates of users fearing every impact besides “Something Else” than any other platform. The other traditional social media platforms (e.g., Facebook, TikTok, Instagram) have significantly lower rates than X (Twitter), but they tend to have higher rates than more special interest-oriented apps (e.g., Pinterest) and more general communication services (e.g., FaceTime). The only platform displaying a significant increase in the rate of users flagging any of these impacts is X (Twitter), where users are more likely than a year ago to say that content they see on the platform increases the risk of violence.

In Summary

These data show that, across all social platforms combined, US adults are encountering less content that they consider bad for the world than they did 1 year ago. Yet, these data highlight different trajectories for different platforms. For example, X (Twitter) has generally held steady as the platform with the highest rates of experiences that are bad for the world, but X (Twitter) users are actually more likely than they were a year ago to say they encounter content on that platform that increases the risk of violence in society. On the other hand, users of platforms and services like Facebook, YouTube, Reddit, and Email are all less likely to report encountering content that they consider bad for the world than they were a year ago.

The decreasing prevalence of users identifying Politics and Local News as describing the content they considered bad for the world may be due to product decisions made by Meta, parent company of Facebook and Instagram, to get rid of its News Tab and to actively demote politics-related posts. Likewise, X (Twitter)’s inaction following studies showing rising rates of hate and antisemitism may have made that platform more appealing to purveyors of hateful and violence-inciting content. We will continue to monitor these trends and see whether changes in product strategy or launches of new features change these platforms’ trajectories.

In our next report, I will shift from societally-focused negative experiences towards individual-focused negative experiences. Subsequent reports will shift to looking at positive experiences, like whether people are learning useful and important things or forging meaningful social connections on these platforms, and how those experiences may be changing over time. As always, I welcome all questions and suggestions.

[1] One other possibility not in this list is that these experiences are not decreasing, but rather that respondents are learning that answering in the affirmative leads to additional questions, which may motivate them to underreport experiences. This explanation is highly unlikely because (a) experiences are not uniformly down, (b) respondents who report an experience in one survey wave are more likely to report experiences in other survey waves, and (c) experiences are down primarily on a couple of platforms that launched major product initiatives regarding content moderation and political content, which are directly connected to the types of experiences that social media users tend to describe as bad for the world.